Replacing the AWS ELB - The Challenges

This is Part 2 in the Replacing the AWS ELB series.

- Replacing the AWS ELB - The Problem

- Replacing the AWS ELB - The Challenges (this post)

- Replacing the AWS ELB - The Design

- Replacing the AWS ELB - The Network Deep Dive

- Replacing the AWS ELB - Automation

- Replacing the AWS ELB - Final Thoughts

Now that you know the history from the previous post, I would like to dive into the challenges I faced during the design process and how they were solved.

High Availability

One of the critical requirements was “Must not be a single point of failure”, which means that whatever solution we went with had to be highly available.

Deploying a highly available haproxy cluster (well, it is really a master/slave deployment, so it cannot actually scale out) is not that hard a task to accomplish.

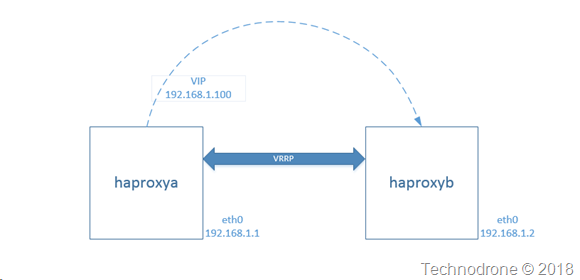

Here is a simple diagram to explain what is going on.

There are two instances, each with haproxy installed and each with its own IP address.

A virtual IP (VIP) is configured for the cluster, and keepalived maintains the state between the two instances. Each instance is configured with a priority (which determines which one is the master and which is the slave), and the two exchange a heartbeat. VRRP is used to maintain a virtual router (a shared virtual interface) between them. If the master goes down, the slave takes over. When the master comes back up, the slave relinquishes control back to the master.
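To make the failover behaviour concrete, here is a minimal sketch of the election rule described above: the live node with the highest priority holds the VIP, and a returning master with a higher priority takes it back (preemption). This is a simplified model, not keepalived's implementation; real VRRP elects via advertisements and timeouts and breaks priority ties by IP address. The node names and priority values (150 and 100) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    priority: int  # higher priority wins the master role
    alive: bool = True


def elect_master(nodes):
    """Return the name of the live node with the highest priority, or None."""
    candidates = [n for n in nodes if n.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda n: n.priority).name


# Hypothetical two-node cluster: haproxy1 is the preferred master.
haproxy1 = Node("haproxy1", priority=150)
haproxy2 = Node("haproxy2", priority=100)
cluster = [haproxy1, haproxy2]

print(elect_master(cluster))  # haproxy1 holds the VIP
haproxy1.alive = False
print(elect_master(cluster))  # haproxy2 takes over
haproxy1.alive = True
print(elect_master(cluster))  # haproxy1 takes the VIP back (preemption)
```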

This works - flawlessly.

Both haproxy instances have the same configuration, so if one of them falls over, the second instance can start serving traffic (almost) instantly.

Problem #1 - VRRP

By default, VRRP uses multicast (see https://serverfault.com/questions/842357/keepalived-sends-both-unicast-and-multicast-vrrp-advertisements), which a standard AWS VPC does not support. That was relatively simple to overcome: keepalived can be configured to use unicast instead, so that was one problem solved.
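As an illustration of the unicast setup, the sketch below renders a keepalived `vrrp_instance` block in which each node lists its own address in `unicast_src_ip` and the other node in `unicast_peer`, so advertisements are sent directly instead of via multicast. The directive names are keepalived's. The instance name, interface, router ID, priorities and all IP addresses are hypothetical placeholders, and the exact options should be checked against the keepalived documentation for your version.

```python
def render_vrrp_instance(name, state, interface, router_id, priority,
                         src_ip, peer_ips, vip):
    """Render a keepalived vrrp_instance block that uses unicast peers."""
    # One indented line per peer inside the unicast_peer { } block.
    peers = "\n".join(f"        {ip}" for ip in peer_ips)
    return (
        f"vrrp_instance {name} {{\n"
        f"    state {state}\n"
        f"    interface {interface}\n"
        f"    virtual_router_id {router_id}\n"
        f"    priority {priority}\n"
        f"    advert_int 1\n"
        f"    unicast_src_ip {src_ip}\n"
        f"    unicast_peer {{\n"
        f"{peers}\n"
        f"    }}\n"
        f"    virtual_ipaddress {{\n"
        f"        {vip}\n"
        f"    }}\n"
        f"}}\n"
    )


# Hypothetical master node; the slave would swap src/peer and use a lower priority.
print(render_vrrp_instance("VI_HAPROXY", "MASTER", "eth0", 51, 150,
                           "192.168.1.10", ["192.168.1.11"], "192.168.1.100"))
```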

Problem #2 - Additional IP address

For this solution to work, we need an additional IP address: the VIP. How do you get an additional IP address in AWS? That is well documented here: https://aws.amazon.com/premiumsupport/knowledge-center/secondary-private-ip-address/. Problem solved.
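For a failover script, assigning the VIP could look roughly like the sketch below. It calls the EC2 `assign_private_ip_addresses` API (as exposed by a boto3 EC2 client) with `AllowReassignment=True`, so the address can be moved from the other node's ENI. The function name `claim_vip`, the ENI ID and the address are hypothetical. The client is passed in as a parameter so the function can be exercised without AWS credentials.

```python
def claim_vip(ec2_client, eni_id, vip):
    """Assign vip as a secondary private IP on the ENI eni_id.

    AllowReassignment=True lets EC2 take the address away from another
    ENI that currently holds it, which is what a takeover needs.
    """
    ec2_client.assign_private_ip_addresses(
        NetworkInterfaceId=eni_id,
        PrivateIpAddresses=[vip],
        AllowReassignment=True,
    )


# Usage with boto3 (not run here; requires AWS credentials):
#   import boto3
#   claim_vip(boto3.client("ec2"), "eni-0123456789abcdef0", "192.168.1.100")
```

Note that the address being assigned still has to belong to the ENI's own subnet, which matters for the next problem.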

Problem #3 - High Availability

So we have the option of attaching an additional ENI to the cluster, which would allow us to achieve something similar to the setup above, but this introduced a bigger problem.

All of this would only work in a single Availability Zone, which means the AZ itself would be a single point of failure. That violates requirement #2, so it would not work.

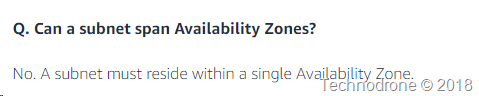

As the AWS documentation clearly states, a subnet cannot span multiple AZs.

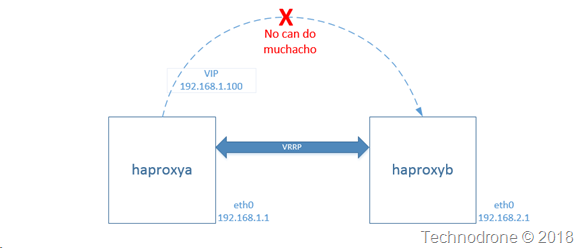

Which means this will not work.

Let me explain why not.

A subnet cannot span multiple AZs. That means if we want the solution deployed in multiple AZs, it needs to be deployed across multiple subnets (192.168.1.0/24 and 192.168.2.0/24), each in its own AZ. The idea of taking an additional ENI from one of the subnets and using it as the VIP will work only in a single AZ, because you cannot move the ENI from a subnet in AZ1 to a subnet in AZ2.
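The core of the problem is plain address arithmetic, which Python's standard `ipaddress` module can show. An address taken from the AZ1 subnet only belongs to 192.168.1.0/24, so no interface in the AZ2 subnet can hold it. The VIP value 192.168.1.100 and the helper name `azs_that_can_host` are hypothetical; the two subnets are the ones from the example above.

```python
import ipaddress

# The two subnets from the example, one per Availability Zone.
subnets = {
    "AZ1": ipaddress.ip_network("192.168.1.0/24"),
    "AZ2": ipaddress.ip_network("192.168.2.0/24"),
}

# Hypothetical VIP carved out of the AZ1 subnet.
vip = ipaddress.ip_address("192.168.1.100")


def azs_that_can_host(ip, subnets):
    """Return the AZs whose subnet actually contains this address."""
    return [az for az, net in subnets.items() if ip in net]


print(azs_that_can_host(vip, subnets))  # ['AZ1']
```

Whichever subnet the VIP is taken from, it can only ever live in that one AZ.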

This means that the solution of having a VIP in one of the subnets would not work.

Another solution would have to be explored, because having both haproxy nodes in a single AZ was more or less the same as having a single node (not exactly the same, but still subject to a complete outage if the entire AZ went down).

Problem #4 - Creating a VIP and allowing it to traverse AZs

One of the biggest problems I had to tackle was this: how do I get an IP address to traverse Availability Zones?

The way this was done can be found in the next post.