Replacing the AWS ELB - Automation

This is Part 5 in the Replacing the AWS ELB series.

- Replacing the AWS ELB - The Problem

- Replacing the AWS ELB - The Challenges

- Replacing the AWS ELB - The Design

- Replacing the AWS ELB - The Network Deep Dive

- Replacing the AWS ELB - Automation (this post)

- Replacing the AWS ELB - Final Thoughts

It goes without saying that everything I have described in the previous posts can be done by hand. It is just really tedious to work through all the stages manually.

Let’s have a look at the stages:

- Create an IAM role with a specific policy that will allow you to run AWS CLI commands from within the EC2 instances

- Create a security group that will allow traffic to flow to and between your haproxy instances

- Deploy 2 EC2 instances - one in each availability zone

- Install haproxy and keepalived on each of the instances

- Configure the correct scripts on each of the nodes (one for the master and the other for the slave) and set up the correct script for transferring ownership of the virtual IP on each instance.

If you were to do all of this manually, it could easily take you 2-3 hours to set up a highly available haproxy pair. And how long does it take to set up an AWS ELB? Less than 2 minutes? This of course is not viable, especially since it should be something that is automated and easy to use.

This will be a long post, so please bear with me, because I would like to explain in detail exactly how this works.

First and foremost - all the code for this post can be found here on GitHub - https://github.com/maishsk/replace-aws-elb (please feel free to contribute/raise issues/questions)

(Ansible was my tool of choice - because that is what I am currently working with - but this can also be done in any tool that you prefer).

The Ansible playbook itself is relatively simple:

```yaml
---
# Part one: IAM role, security group and the two EC2 instances
- name: Launch haproxy EC2 instances
  hosts: localhost
  connection: local
  gather_facts: True
  vars:
    # supplied at run time with --extra-vars, which take precedence over these
    component_name: "{{ component_name }}"
    stack_name: "{{ stack_name }}"
  vars_files:
    - vars/vars.yml
  roles:
    - iam_role
    - secgroup
    - ec2

# Keep the instance IDs and IPs collected by the ec2 role as facts on localhost
- name: Set facts - allows build info to pass to hosts
  hosts: localhost
  connection: local
  tasks:
    - set_fact:
        node0: "{{ ec2_ids.0 }}"
        node1: "{{ ec2_ids.1 }}"
        node0_ip: "{{ ec2_IPS.0 }}"
        node1_ip: "{{ ec2_IPS.1 }}"

# Part two: route the virtual IP to node0 in every route table of the VPC
- name: Section - Routing
  hosts: localhost
  connection: local
  vars_files:
    - vars/vars.yml
  tasks:
    - name: Get Route table facts
      ec2_vpc_route_table_facts:
        region: "{{ region }}"
        filters:
          tag-key: Name
          vpc-id: "{{ vpc_id }}"
      register: vpc_rt_facts

    - name: Store route tables for future use
      set_fact:
        route_table_ids: "{{ vpc_rt_facts | json_query('route_tables[*].id') }}"

    - name: Create Route for VIP
      shell: |
        # if a route for the VIP already exists, delete it before re-creating it
        /usr/bin/aws ec2 describe-route-tables --route-table-ids {{ item }} | grep -q "{{ virtual_ip }}/32"
        if [ $? -eq 0 ]; then
          /usr/bin/aws ec2 delete-route --route-table-id {{ item }} --destination-cidr-block {{ virtual_ip }}/32
          /usr/bin/aws ec2 create-route --route-table-id {{ item }} --destination-cidr-block {{ virtual_ip }}/32 --instance-id {{ node0 }}
        else
          /usr/bin/aws ec2 create-route --route-table-id {{ item }} --destination-cidr-block {{ virtual_ip }}/32 --instance-id {{ node0 }}
        fi
      with_items: "{{ route_table_ids }}"

# Part three: install and configure the software on the new instances
- name: Configure nodes
  hosts: ec2_hosts
  remote_user: ec2-user
  become: yes
  become_method: sudo
  gather_facts: true
  vars_files:
    - vars/vars.yml
  vars:
    component_name: "{{ hostvars['localhost']['component_name'] }}"
    virtual_ip: "{{ hostvars['localhost']['virtual_ip'] }}"
    node0: "{{ hostvars['localhost']['node0'] }}"
    node1: "{{ hostvars['localhost']['node1'] }}"
    node0_ip: "{{ hostvars['localhost']['node0_ip'] }}"
    node1_ip: "{{ hostvars['localhost']['node1_ip'] }}"
    rt_table_id: "{{ hostvars['localhost']['route_table_ids'] }}"
  roles:
    - haproxy
```

Part one has 3 roles: iam_role, secgroup and ec2.

Part two sets up the routing that sends the traffic to the correct instance.

Part three goes into the instances themselves and sets up all the software.

Let’s dive into each of these.

Part One

In order to allow the haproxy instances to modify the route, they need access to the AWS API, and this is what you should use an IAM role for. The two policy files you will need are here. Essentially, the only permissions the instance needs are the ones that allow it to update the route tables in the VPC.

I chose to attach these permissions to the IAM role as a managed policy rather than an inline policy, for reasons I will explain in a future blog post. Both approaches work, so you can choose whatever suits you.

Next is the security group, and the ingress rule I used here is far too permissive: it opens all ports on the instances to the entire VPC. I did this because haproxy in this setup proxied a number of applications on a significant number of ports, so the decision was to open all the ports. You should evaluate the correct security posture for your own applications.
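The actual policy files live in the repository. As a rough sketch of the managed-policy approach, the two tasks below create a managed policy and a role with Ansible's `iam_managed_policy` and `iam_role` modules. The list of actions is an assumption, based on the AWS CLI route calls used elsewhere in this post (describe, create, delete and replace route), and the variables `haproxy_policy_name` and `haproxy_role_name` are hypothetical.

```yaml
# Sketch only: the permissions below are assumed, not copied from the repository.
- name: Create a managed policy that lets the haproxy nodes move the VIP route
  iam_managed_policy:
    policy_name: "{{ haproxy_policy_name }}"   # hypothetical variable
    policy_description: "Allow haproxy nodes to update the VIP route"
    policy: "{{ route_policy | to_json }}"
    state: present
  vars:
    route_policy:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Action:
            - ec2:DescribeRouteTables
            - ec2:CreateRoute
            - ec2:DeleteRoute
            - ec2:ReplaceRoute
          Resource: "*"

- name: Create the role the instances assume and attach the managed policy
  iam_role:
    name: "{{ haproxy_role_name }}"            # hypothetical variable
    assume_role_policy_document: "{{ trust_policy | to_json }}"
    managed_policy:
      - "{{ haproxy_policy_name }}"
    state: present
  vars:
    # trust policy: only the EC2 service may assume this role
    trust_policy:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Principal:
            Service: ec2.amazonaws.com
          Action: sts:AssumeRole
```

The instances use the role through an instance profile; in recent Ansible versions `iam_role` creates one with the same name by default, but check the module documentation for the version you run.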
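For comparison, a tighter rule set could look roughly like the sketch below, using the `ec2_group` module. This is not the rule from the post: the application ports (80 and 443) and the `vpc_cidr` variable are placeholders, and it assumes keepalived exchanges VRRP (IP protocol 112) directly between the two nodes.

```yaml
# Sketch of a tighter alternative to "all ports within the VPC".
# vpc_cidr and ports 80/443 are placeholders for your own values.
- name: Security group for the haproxy pair
  ec2_group:
    name: "{{ stack_name }}-haproxy"
    description: "haproxy and keepalived nodes"
    vpc_id: "{{ vpc_id }}"
    region: "{{ region }}"
    rules:
      # application traffic from inside the VPC
      - proto: tcp
        from_port: 80
        to_port: 80
        cidr_ip: "{{ vpc_cidr }}"
      - proto: tcp
        from_port: 443
        to_port: 443
        cidr_ip: "{{ vpc_cidr }}"
      # SSH so that Ansible can configure the nodes
      - proto: tcp
        from_port: 22
        to_port: 22
        cidr_ip: "{{ vpc_cidr }}"
      # VRRP (IP protocol 112) only between members of this same group
      - proto: 112
        group_name: "{{ stack_name }}-haproxy"
  register: haproxy_sg
```

Referencing the group's own name in `group_name` makes the VRRP rule self-referencing, so only the two haproxy nodes can talk VRRP to each other.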

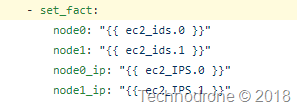

Last but not least is deploying the EC2 instances. This is pretty straightforward, except for the last part, where I preserve a few bits of instance details for future use.
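A minimal sketch of what such an `ec2` role could contain, assuming one subnet per availability zone. The variables `ami_id`, `instance_type`, `key_name`, `subnet_ids`, `haproxy_sg_id` and `haproxy_role_name` are hypothetical; `ec2_ids`, `ec2_IPS` and `ec2_hosts` are the names the playbook above expects. The `flatten` filter needs Ansible 2.5 or later.

```yaml
# Sketch: launch one instance per subnet, i.e. one per availability zone.
- name: Launch one haproxy node in each availability zone
  ec2:
    region: "{{ region }}"
    image: "{{ ami_id }}"
    instance_type: "{{ instance_type }}"
    key_name: "{{ key_name }}"
    group_id: "{{ haproxy_sg_id }}"
    vpc_subnet_id: "{{ item }}"
    instance_profile_name: "{{ haproxy_role_name }}"
    # the node must accept traffic for the VIP, which is not its own address
    source_dest_check: no
    count: 1
    wait: yes
    instance_tags:
      Name: "{{ stack_name }}-{{ component_name }}"
  with_items: "{{ subnet_ids }}"
  register: ec2_launch

# Preserve the details that the later plays read (in launch order)
- name: Store instance IDs and private IPs
  set_fact:
    ec2_ids: "{{ ec2_launch.results | map(attribute='instances') | flatten | map(attribute='id') | list }}"
    ec2_IPS: "{{ ec2_launch.results | map(attribute='instances') | flatten | map(attribute='private_ip') | list }}"

- name: Add the new instances to the ec2_hosts group used by the last play
  add_host:
    name: "{{ item }}"
    groups: ec2_hosts
  with_items: "{{ ec2_IPS }}"

- name: Wait until SSH is reachable on both nodes
  wait_for:
    host: "{{ item }}"
    port: 22
    timeout: 300
  with_items: "{{ ec2_IPS }}"
```

Disabling the source/destination check matters here because traffic sent to the VIP arrives at an instance whose own address is different, and EC2 drops such packets while the check is enabled.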

Part Two

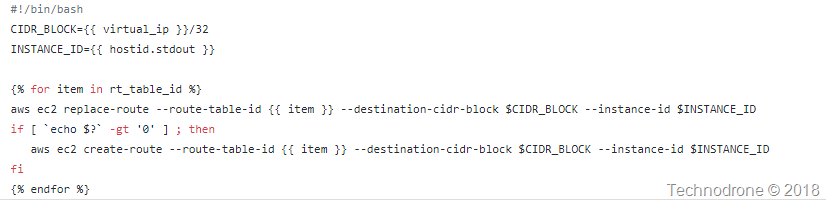

Here I get information about all the route tables in the VPC you are currently using. This is important because the route for the virtual IP has to be updated in each one of them. The reason this is done through a shell script and not an Ansible module is that the module does not support updates, only create or delete, which would have made the process of collecting all the existing entries, storing them and then adding a new one to the list far too complicated. This is an Ansible limitation, and the shell script is a simple way to get around it.
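The script in the playbook checks for the route, then deletes and re-creates it. The AWS CLI also offers `aws ec2 replace-route`, which changes the target of an existing route in a single call. A sketch of the same task using it, falling back to `create-route` when the route does not exist yet, assuming the same `region`, `virtual_ip`, `node0` and `route_table_ids` variables as the Routing play:

```yaml
# Alternative sketch: replace the VIP route in place, create it only if missing.
- name: Point the VIP route at node0 in every route table
  shell: |
    aws ec2 replace-route --region {{ region }} --route-table-id {{ item }} \
      --destination-cidr-block {{ virtual_ip }}/32 --instance-id {{ node0 }} \
    || aws ec2 create-route --region {{ region }} --route-table-id {{ item }} \
      --destination-cidr-block {{ virtual_ip }}/32 --instance-id {{ node0 }}
  with_items: "{{ route_table_ids }}"
```

Replacing the route in place avoids the short window between `delete-route` and `create-route` during which the VIP has no route at all.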

Part Three

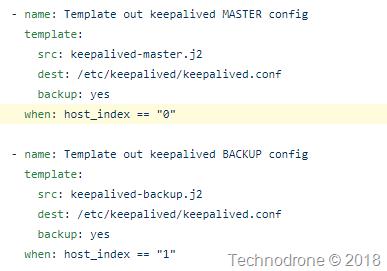

So the instances themselves have been provisioned. The whole idea of VRRP presumes that one of the nodes is the master and the other is the slave. The critical question is: how did I decide which node should be the master and which should be the slave?

This was done here. The instances come up in no particular order, but each one still has a position in the sequence in which they were provisioned, and that sequence is available from this fact. I then exposed it in a simpler form here, for easier re-use.

Using this fact, I can now run some logic during the software installation based on the identity of the instance. You can see how this was done here.

The other place where the identity of the node is used is in the Jinja templates: the IP address of the node is injected into the file based on its identity.
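One way to expose the identity as simple facts is sketched below. Rather than the launch-sequence fact the post links to, it compares the instance ID reported by `ec2_metadata_facts` (Ansible 2.5 and later; earlier versions call it `ec2_facts`) with `node0`, which the "Configure nodes" play passes in. The fact names `node_role` and `peer_ip` are hypothetical.

```yaml
# Sketch: derive this node's identity from its instance ID and node0.
- name: Collect EC2 instance metadata (provides ansible_ec2_instance_id)
  ec2_metadata_facts:

- name: Expose the identity as simple facts for later tasks and templates
  set_fact:
    node_role: "{{ 'master' if ansible_ec2_instance_id == node0 else 'slave' }}"
    peer_ip: "{{ node1_ip if ansible_ec2_instance_id == node0 else node0_ip }}"
```

Later tasks can then branch with `when: node_role == 'master'`; comparing strings avoids the truthiness surprises that templated booleans can cause in `when` clauses.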
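As a hypothetical example of identity-driven logic during installation (not necessarily what the repository does), the sketch below installs the packages on both nodes and then starts keepalived on node0 before node1. It assumes `node0_ip` and `node1_ip` hold the instances' private addresses, so they can be compared with the gathered `ansible_default_ipv4.address`.

```yaml
# Sketch: same packages everywhere, role-specific ordering for keepalived.
- name: Install haproxy and keepalived on both nodes
  yum:
    name:
      - haproxy
      - keepalived
    state: present

- name: Enable and start haproxy on both nodes
  service:
    name: haproxy
    state: started
    enabled: yes

# With the default linear strategy each task finishes on all hosts before the
# next task starts, so node0 brings keepalived up first.
- name: Start keepalived on node0 first
  service:
    name: keepalived
    state: started
    enabled: yes
  when: ansible_default_ipv4.address == node0_ip

- name: Then start keepalived on node1
  service:
    name: keepalived
    state: started
    enabled: yes
  when: ansible_default_ipv4.address == node1_ip
```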
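A sketch of such a template, written inline with the `copy` module's `content` (which Ansible templates with Jinja before writing the file). It assumes keepalived uses unicast peers, that `node0_ip`/`node1_ip` are private addresses, and that the master also plumbs the VIP onto its interface; `virtual_router_id 51`, the priorities and the notify script path `/usr/local/bin/vip-takeover.sh` are placeholders.

```yaml
# Sketch: keepalived.conf with this node's identity and IPs injected by Jinja.
- name: Render keepalived.conf for this node
  copy:
    dest: /etc/keepalived/keepalived.conf
    mode: "0644"
    content: |
      {% set is_node0 = (ansible_default_ipv4.address == node0_ip) %}
      vrrp_instance haproxy_vip {
          interface {{ ansible_default_ipv4.interface }}
          state {{ 'MASTER' if is_node0 else 'BACKUP' }}
          priority {{ 101 if is_node0 else 100 }}
          virtual_router_id 51
          unicast_src_ip {{ node0_ip if is_node0 else node1_ip }}
          unicast_peer {
              {{ node1_ip if is_node0 else node0_ip }}
          }
          virtual_ipaddress {
              {{ virtual_ip }}/32
          }
          notify_master /usr/local/bin/vip-takeover.sh
      }
```

The same file is rendered on both nodes; only the state, priority and the source/peer addresses flip depending on which node is rendering it.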

And of course the script that the instance uses to update the route table uses facts and variables collected from different places throughout the playbook.
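A minimal sketch of such a takeover script, deployed to both nodes. The path `/usr/local/bin/vip-takeover.sh` is hypothetical; `rt_table_id`, `virtual_ip`, `node0`, `node1` and `node0_ip` come from the "Configure nodes" play and `region` from `vars/vars.yml`. The instance ID is injected by Jinja at deploy time rather than looked up at run time.

```yaml
# Sketch: script keepalived can run when this node becomes master.
- name: Install the route takeover script on both nodes
  copy:
    dest: /usr/local/bin/vip-takeover.sh
    mode: "0755"
    content: |
      #!/bin/bash
      # Point the VIP route in every VPC route table at this instance.
      INSTANCE_ID={{ node0 if ansible_default_ipv4.address == node0_ip else node1 }}
      {% for rt in rt_table_id %}
      /usr/bin/aws ec2 replace-route --region {{ region }} --route-table-id {{ rt }} \
        --destination-cidr-block {{ virtual_ip }}/32 --instance-id "$INSTANCE_ID"
      {% endfor %}
```

`replace-route` is enough here because part two of the playbook has already created the VIP route in every table.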

A couple of final details. The AMI I used was Amazon Linux, which means that the AWS CLI is pre-installed; if you are using something else, you will need to install the CLI yourself. The instances get their credentials from the attached IAM role, but when running an AWS CLI command you also need to provide an AWS region, otherwise the command will fail. This is done with Jinja (again) here.

And finally, for haproxy to expose its logs, a few short commands are necessary.

And there you have it: a fully provisioned haproxy pair that serves traffic internally on a single virtual IP.

Here is an asciinema recording of the process, which takes about 3 minutes.
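One way to provide the region is a default AWS CLI configuration for root, which is the user keepalived runs its notify scripts as by default. A minimal sketch, assuming `region` comes from `vars/vars.yml`; credentials still come from the instance's IAM role, so no keys are written.

```yaml
# Sketch: default region for the AWS CLI when it runs as root.
- name: Create root's AWS CLI configuration directory
  file:
    path: /root/.aws
    state: directory
    mode: "0700"

- name: Set the default region for the AWS CLI
  copy:
    dest: /root/.aws/config
    mode: "0600"
    content: |
      [default]
      region = {{ region }}
```

The directory task comes first because `copy` will not create a missing parent directory for a single file.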
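A sketch of what those commands could look like as Ansible tasks. It assumes haproxy.cfg sends its logs to `127.0.0.1` with facility `local2` (as the default RHEL-family haproxy.cfg does) and that rsyslog is the syslog daemon on the instance.

```yaml
# Sketch: let rsyslog receive haproxy's syslog messages on localhost UDP 514.
- name: Configure rsyslog to accept haproxy's messages
  copy:
    dest: /etc/rsyslog.d/haproxy.conf
    mode: "0644"
    content: |
      $ModLoad imudp
      $UDPServerAddress 127.0.0.1
      $UDPServerRun 514
      local2.*    /var/log/haproxy.log

- name: Restart rsyslog to load the new configuration
  service:
    name: rsyslog
    state: restarted
```

Binding the UDP listener to 127.0.0.1 keeps the syslog port closed to the rest of the VPC.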

In the last post - I will go into some of the thoughts and lessons learned during this whole exercise.