Storage vMotion - A Deep-Dive

Of all the features available in vSphere, one of my favourites is Storage vMotion, which VMware describes as follows:

In simple terms, vMotion allows you to move your VM from one host to another, while Storage vMotion allows you to move your VMs between the different storage arrays / LUNs presented to your ESX host. All without downtime (OK, maybe one or two dropped pings...).

I wanted to understand exactly how this works, so I looked up Kit Colbert’s session from VMworld 2009 (ancient, I know, but still a great source of information).

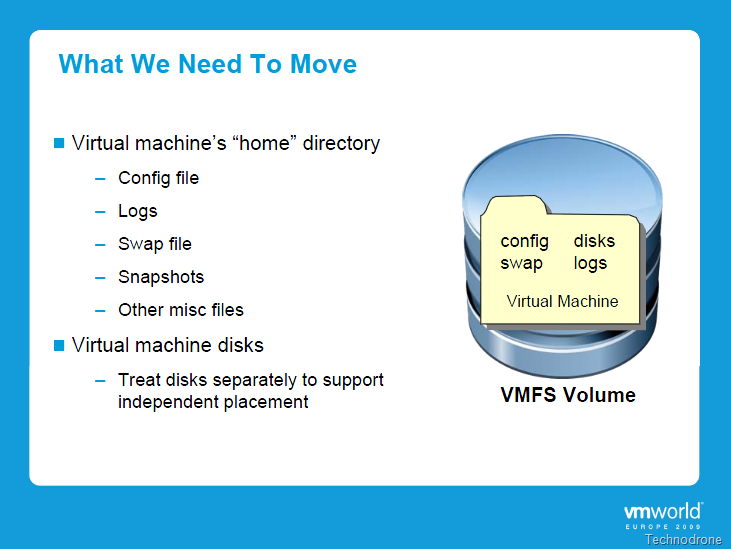

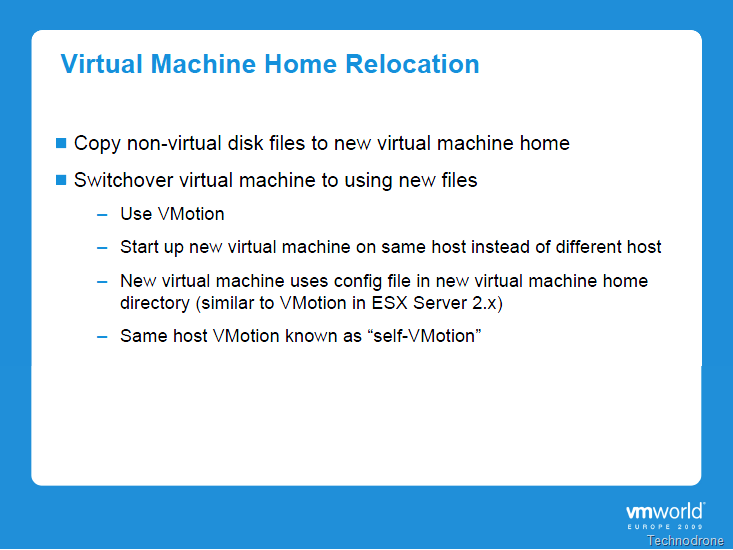

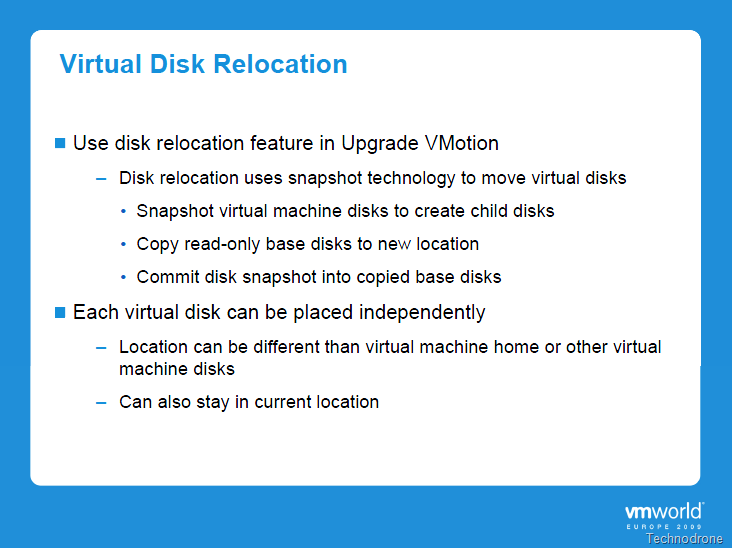

Borrowing some slides from Kit’s presentation, let’s try to understand a bit more.

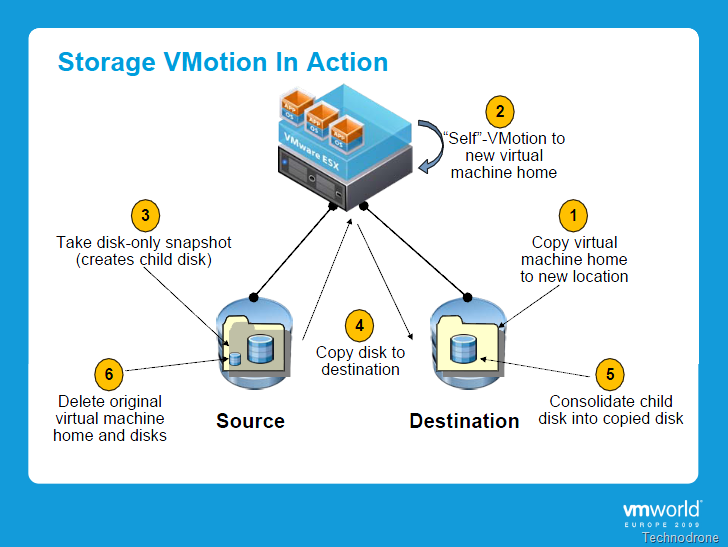

And how does this work?

That is all well and good; now for a look under the covers to see exactly what is happening.

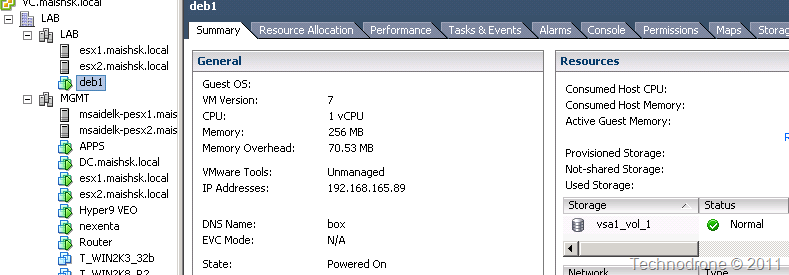

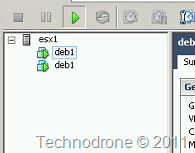

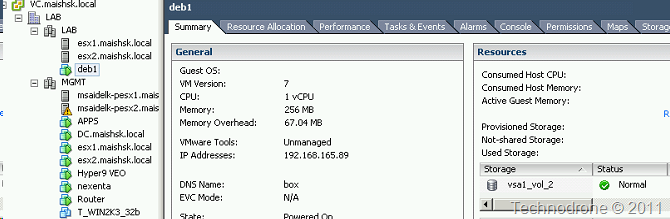

So we start a Storage vMotion of a VM named deb1 from vsa1_vol_1 to vsa1_vol_2.

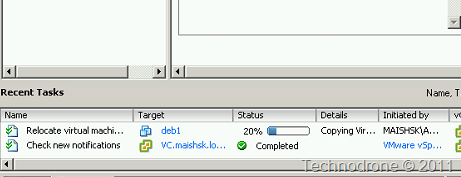

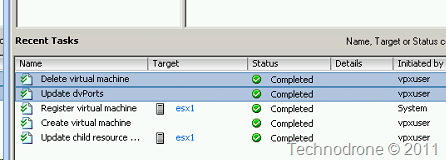

The task starts running, as you can see in the vCenter task list.

But the real “magic” is happening on the Host itself.

Opening a vSphere Client session directly to the host, we can see that a new VM is created.

Just before the SvMotion completes, you will see that both machines co-exist for a short time and both are powered on.

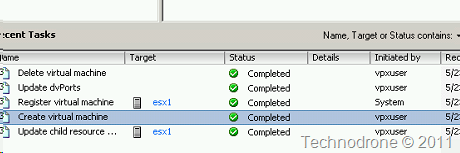

The switch is made and the old one is powered off and removed.

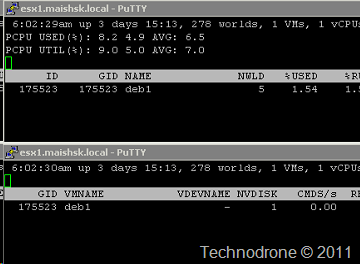

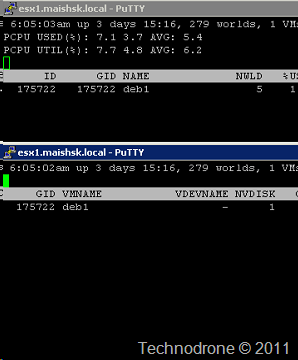

Now from an esxtop perspective: here you can see that there is one VM, with an ID of 175523.

Start the SvMotion and there are two VMs.

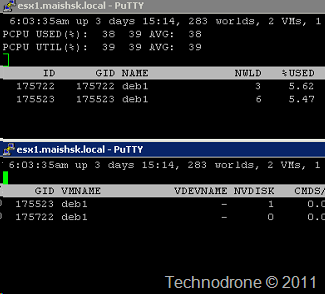

SvMotion completes and only the new VM with its new ID (175722) remains.

And the machine is now running from vsa1_vol_2.
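The sequence seen in the host client and in esxtop can be summarised as a tiny model of the host’s inventory. This is only a sketch of what was observed, not how ESX manages VMs internally: the function name and the {ID: datastore} representation are my own assumptions, while the IDs and datastore names are the ones from the screenshots. What it captures is that at every step at least one copy of the VM is running, which is why the guest sees no downtime beyond a dropped ping or two.

```python
from typing import Dict, List, Tuple


def host_view_during_svmotion(old_id: int, new_id: int,
                              src_ds: str, dst_ds: str) -> List[Tuple[str, Dict[int, str]]]:
    """Hypothetical model: what the host shows at each stage, as {VM ID: datastore}."""
    running: Dict[int, str] = {old_id: src_ds}
    timeline = [("before", dict(running))]

    # A second VM with a new ID is created and powered on against the destination.
    running[new_id] = dst_ds
    timeline.append(("both powered on", dict(running)))

    # Switchover: the old VM is powered off and removed.
    del running[old_id]
    timeline.append(("after switchover", dict(running)))
    return timeline


for stage, view in host_view_during_svmotion(175523, 175722, "vsa1_vol_1", "vsa1_vol_2"):
    print(stage, view)
```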

And that is how SvMotion works.

**Update**

After receiving a message on Twitter from Emré Celebi with the following text,

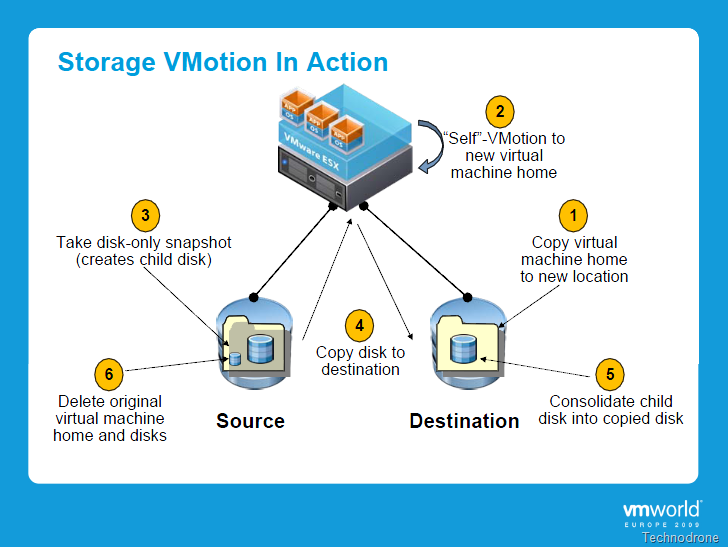

and also a comment from Duncan Epping, I realised that the information I had posted pertained to ESX 3.5 and not 4.x.

So here is the correct technical document for 4.x.

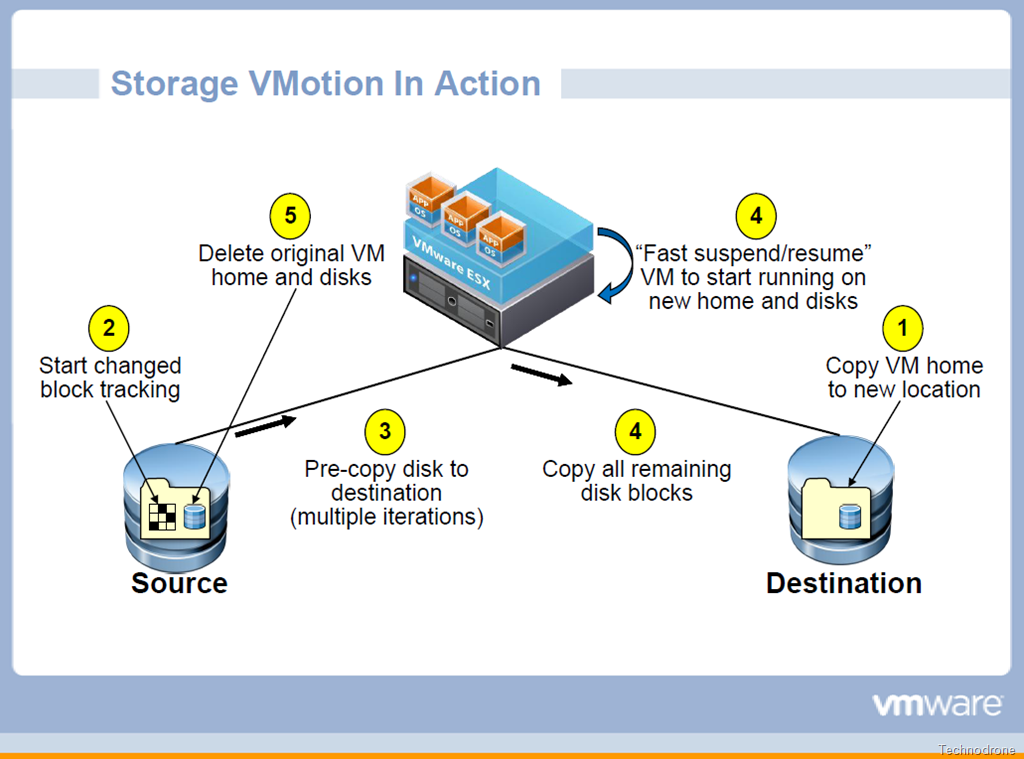

So what changed? CBT (Changed Block Tracking) is now used to track the changes made between the start of the process and the last stage just before the switchover. Eric Siebert has a good explanation of CBT here.
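To make the CBT idea concrete, here is a toy tracker in Python. It is a hypothetical illustration, not VMware’s implementation: the class name, the 64 KB default block size and the set-based bitmap are all assumptions. Guest writes mark the blocks they touch, and a checkpoint hands back the changed blocks and starts a fresh epoch, which is what lets each pass copy only what changed since the previous one.

```python
from typing import Set


class ChangedBlockTracker:
    """Toy changed-block tracker (hypothetical; real CBT granularity is not covered here)."""

    def __init__(self, disk_size: int, block_size: int = 64 * 1024):
        self.block_size = block_size
        self.num_blocks = (disk_size + block_size - 1) // block_size
        self._dirty: Set[int] = set()

    def record_write(self, offset: int, length: int) -> None:
        """Mark every block touched by a guest write of `length` bytes at `offset`."""
        if length <= 0:
            return
        first = offset // self.block_size
        last = (offset + length - 1) // self.block_size
        self._dirty.update(range(first, last + 1))

    def checkpoint(self) -> Set[int]:
        """Return the blocks changed since the last checkpoint and start a new epoch."""
        changed, self._dirty = self._dirty, set()
        return changed
```

A write that straddles a block boundary, such as 2 bytes at offset 4095 with 4 KB blocks, marks both blocks it touches.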

In this great session from Ali Mashtizadeh and Emre Celebi, I learnt more about the process and how it now works in 4.x.

What are the differences?

Here is the process.

So how does Changed Block Tracking come into play?

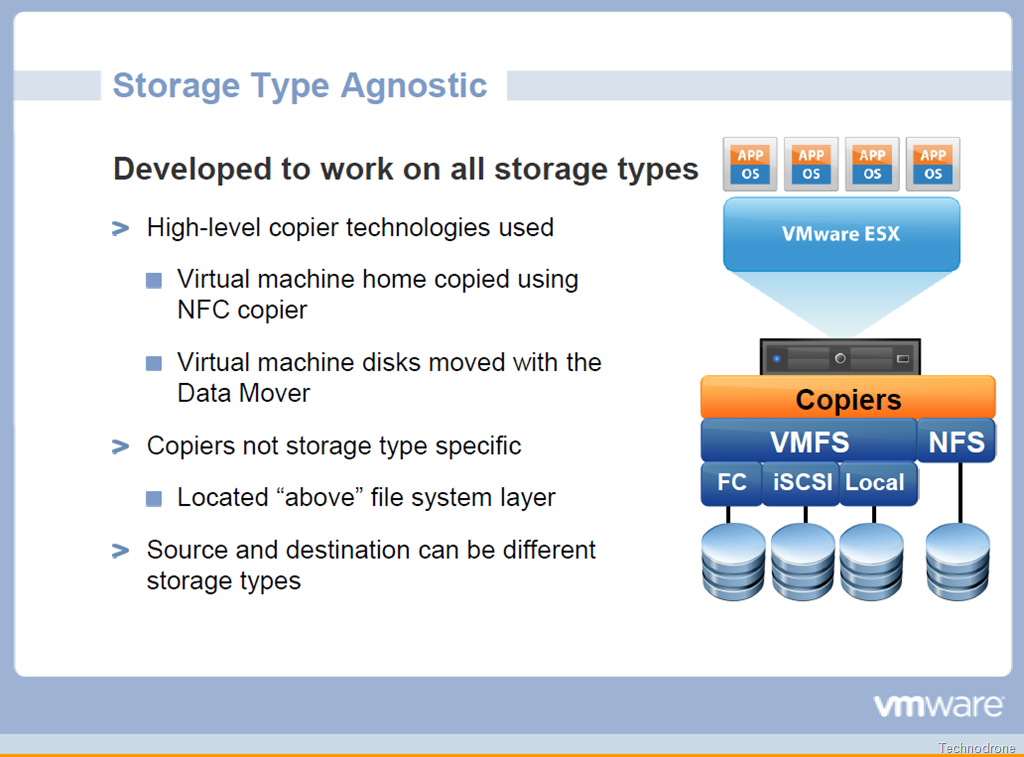

In 4.x, VMware introduced the Data Mover, which can also offload storage operations to the storage array through VAAI.
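A rough sketch of the idea behind the offload: the data mover first asks the array to copy an extent itself (for example through VAAI’s Full Copy primitive), and only falls back to reading and writing the blocks on the host when the array declines. The function name and the `offload` callback are hypothetical stand-ins for whatever the real data mover and array driver do.

```python
from typing import Callable, Optional

# Hypothetical array-side copy: returns True if the array accepted and
# completed the request, False if it declined. In this sketch the callback
# itself is responsible for moving the data when it returns True.
Offload = Callable[[int, int], bool]


def data_mover_copy(src: bytearray, dst: bytearray, offset: int, length: int,
                    offload: Optional[Offload] = None) -> str:
    """Copy src[offset:offset+length] into dst, trying the array first.

    Returns "offloaded" when the array handled the copy, or "software" when
    the host had to read and write the blocks itself.
    """
    if offload is not None and offload(offset, length):
        return "offloaded"
    # Fallback: the host reads the extent and writes it to the destination.
    dst[offset:offset + length] = src[offset:offset + length]
    return "software"
```

The benefit of the offload path is that the blocks never travel through the host, which is where the savings in host CPU and fabric traffic come from.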

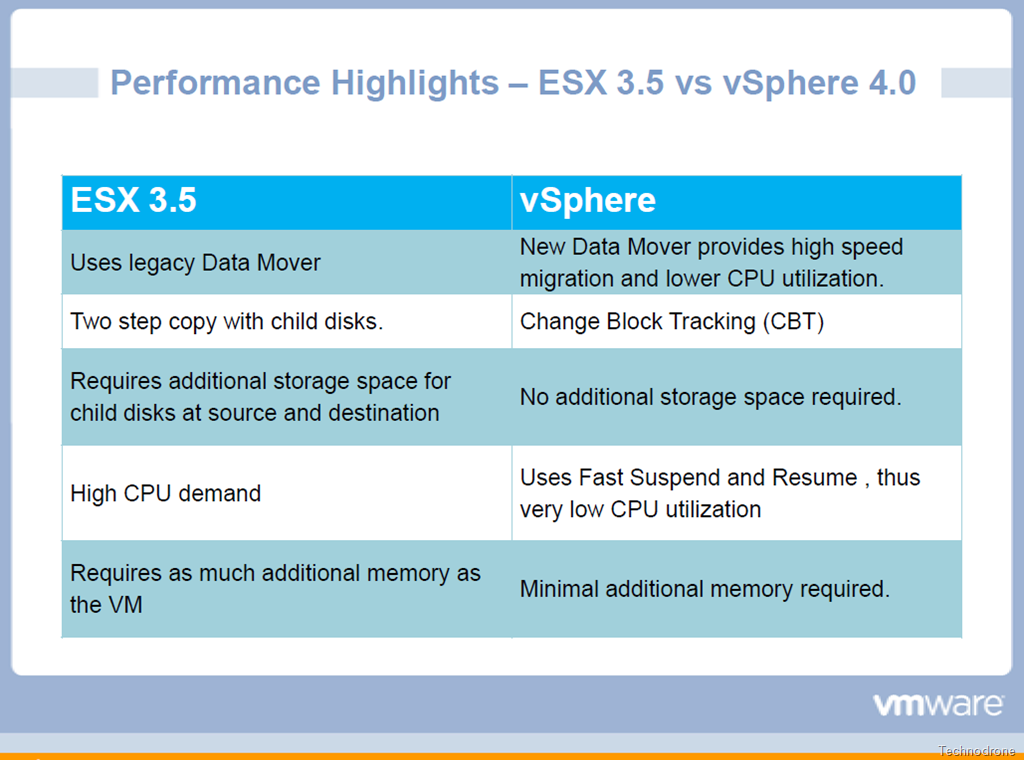

Comparing the Old and the New

In 4.x, this is the process:

- Start Storage vMotion

- Flag the disk and start a CBT checkpoint.

- Pre-copy the disk to the destination in multiple iterations.

- Check which blocks have changed since the checkpoint, copy only those remaining blocks, and use Fast Suspend/Resume for the switchover.

- Delete the original.
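The 4.x steps above can be simulated in a few lines of Python. This is a simplified sketch under stated assumptions, not the ESX code: the disk is a list of blocks, a hypothetical `guest_io` callback supplies the writes the guest makes during each pre-copy pass, a set of block indices plays the role of the CBT checkpoint, and the switchover happens once the dirty set is at or below an assumed `switchover_threshold` (or after an assumed `max_passes` cap).

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical guest workload: for pre-copy pass n, the writes the guest
# issues while that pass runs, as {block index: new contents}.
GuestIO = Callable[[int], Dict[int, bytes]]


def svmotion_4x(source: List[bytes], guest_io: GuestIO,
                max_passes: int = 10,
                switchover_threshold: int = 1) -> Tuple[List[bytes], List[int]]:
    """Simulate the 4.x flow: CBT checkpoint, iterative pre-copy, fast suspend/resume.

    Returns the destination disk and the number of blocks copied at each step:
    one entry per pre-copy pass, plus a final entry for the copy done while
    the VM is suspended.
    """
    dest: List[bytes] = [b""] * len(source)
    to_copy: Set[int] = set(range(len(source)))  # first pass copies the whole disk
    copied_per_step: List[int] = []

    for n in range(max_passes):
        dirty: Set[int] = set()  # CBT checkpoint: track writes from here on
        for block in sorted(to_copy):
            dest[block] = source[block]
        # The guest keeps running during the pass; CBT records what it touches.
        for block, data in guest_io(n).items():
            source[block] = data
            dirty.add(block)
        copied_per_step.append(len(to_copy))
        to_copy = dirty  # the next pass copies only the changed blocks
        if len(to_copy) <= switchover_threshold:
            break
    # If the passes never shrink below the threshold, this sketch simply stops
    # after max_passes and copies whatever is left while suspended.

    # Fast suspend: with the guest paused, no new writes can arrive, so the last
    # changed blocks are copied and the VM resumes on the destination.
    for block in sorted(to_copy):
        dest[block] = source[block]
    copied_per_step.append(len(to_copy))
    # "Delete original": the caller can now discard `source`.
    return dest, copied_per_step
```

With an 8-block disk and a workload in which the guest rewrites three blocks during the first pass and one block during the second, the steps copy 8 blocks, then 3, then 1 while suspended, and the destination ends up identical to the source.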

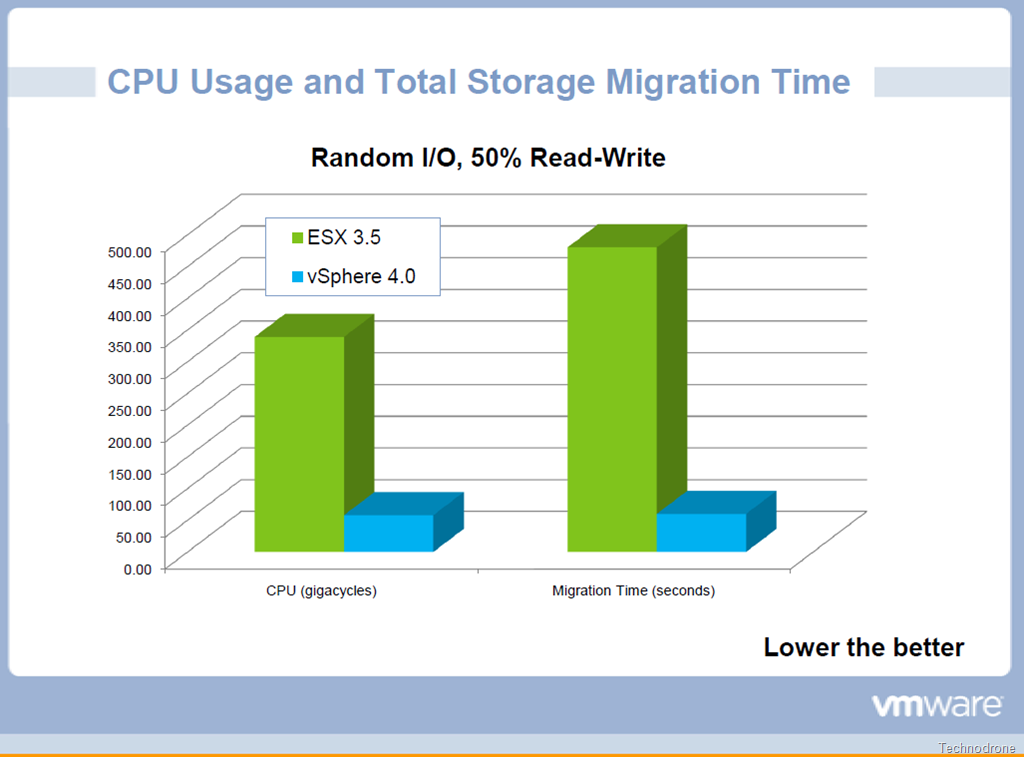

So how does this compare performance-wise with 3.5? As you can see below, the performance gain is substantial, both in the ESX CPU cycles used during the process and in the time the process takes.
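Why do the multiple pre-copy iterations converge quickly? A back-of-the-envelope model helps, under simplifying assumptions of my own (not taken from the VMworld session): a disk of size $S$, a copy rate $B$, and a guest that dirties new data at a steady rate $D < B$. Pass $0$ copies the whole disk in $S/B$, during which $D \cdot S/B = rS$ is dirtied, where $r = D/B$; each later pass therefore copies $r$ times as much as the one before it. After pre-copy passes $0$ to $n$, the data left for the suspended stage is:

$$
r = \frac{D}{B}, \qquad C_i = S\,r^{i}, \qquad
\sum_{i=0}^{n} C_i = S\,\frac{1 - r^{n+1}}{1 - r}, \qquad
C_{\text{suspend}} = S\,r^{n+1}
$$

With $r = 0.1$, for instance, each pass is a tenth of the previous one, so after three pre-copy passes ($n = 2$) only $S/1000$ remains to be copied while the VM is suspended. If the guest dirties data as fast as it can be copied ($r \ge 1$), the passes stop shrinking, which is why any such scheme needs a cap on the number of iterations.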

Just one last thing regarding troubleshooting.

The VMworld session goes into more detail than I have here, so I highly recommend that anyone who wants to understand the process in depth watch the full session. It is free and an hour well spent.

The screenshots posted above showing the process are from a 4.x environment, so they reflect the updated method.

Thanks again to Emré Celebi and Duncan Epping.