vMotion issues (78%)

In the current series of posts I am writing on running a vSphere lab on ESX 1, 2 and 3, I wanted to set up an NFS shared storage between my 2 ESX hosts to test vMotion.

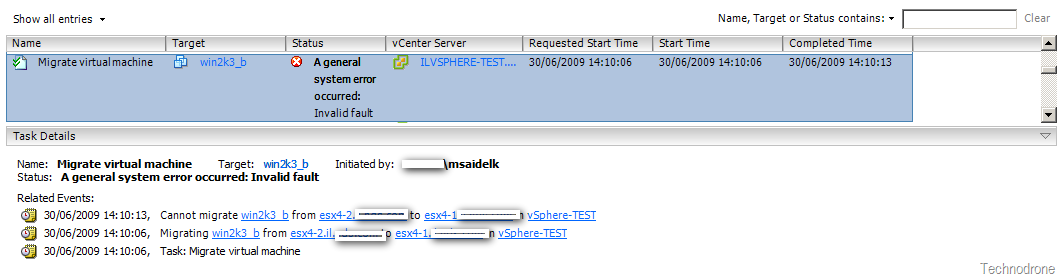

I ran into an interesting issue which I could hardly find any mention of on the web.

We all know that there are countless amount of posts about vMotion failing at 10% or failing at 90% but not anything about 78%. Well I hate to be picky, but this one was baffling me a bit. I found only only mention of this on the communities, but nothing else.

A bit more detail. I had connected two ESX hosts to an NFS share from Openfiler. There was no problem at all. Both hosts saw the storage. Created machines without any issues on both hosts. Only vMotion would fail – with a very ambiguous error.

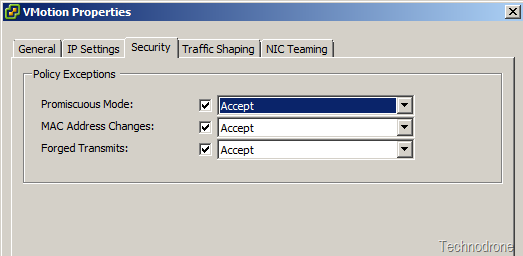

Every single time at 78%. At first I though it was because Promiscuous mode was not enabled on the NIC and on the vSwitch, so I changed that to enabled

Did not help.

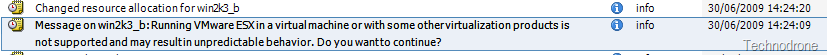

I tried to get information of of the VMware.log file of the VM, but the only things I could see were these:

Jun 30 13:34:04.615: vmx| Running VMware ESX in a virtual machine or with some other virtualization products is not supported and may result in unpredictable behavior. Do you want to continue?---------------------------------------So maybe that was the issue? I asked hany_michael and the_crooked_toe if they had any issues with vMotion like this but they did not, even though running a similar environment to mine. This line above was because I was running ESX as a VM, I would get it as well when powering on a VM but it would succeed.

I tried to go through the logs of the VM’s and was not getting more information from it either besides that it could not find the file on the new host.

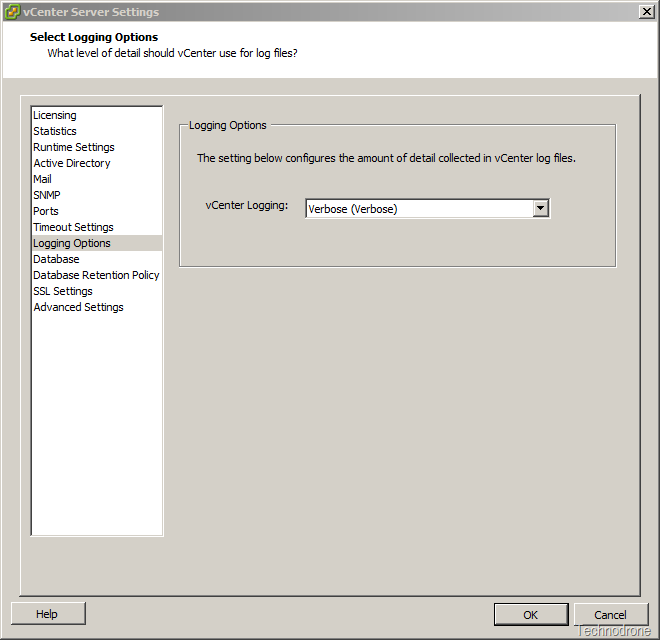

Turned on verbose logging on the vCenter

Did not get much either.

[2009-06-30 13:56:47.886 03756 error ‘App’] [MIGRATE] (1246359385573990) error while tracking VMotion progress (RuntimeFault)

Since this was NFS I started to dive into the vmkernel logs of the ESX hosts at /var/log/vmkernel and found this:

ESX4-1

Jun 30 12:24:40 esx4-2 vmkernel: 0:12:18:19.207 cpu1:4396)WARNING: Swap: vm 4396: 2457: Failed to open swap file '/volumes/c31eba3f-9dca625f/win2k3/win2k3-4aed76bf.vswp': Not found

Jun 30 13:34:05 esx4-2 vmkernel: 0:13:27:43.732 cpu1:4433)WARNING: VMotion: 3414: 1246358033497547 D: Failed to reopen swap on destination: Not foundESX4-2

Jun 30 13:14:55 esx4-1 vmkernel: 0:14:45:00.526 cpu1:4462)WARNING: Swap: vm 4462: 2457: Failed to open swap file '/volumes/c861a58d-45816333/win2k3_b/win2k3_b-65841149.vswp': Not found

Jun 30 13:14:55 esx4-1 vmkernel: 0:14:45:00.526 cpu1:4462)WARNING: VMotion: 3414: 1246356880465431 D: Failed to reopen swap on destination: Not found

Jun 30 13:14:55 esx4-1 vmkernel: 0:14:45:00.526 cpu1:4462)WARNING: Migrate: 295: 1246356880465431 D: Failed: Not found (0xbad0003)@0x41800da0e0d5Now why would it not find the swap file? I mean both of the hosts are connected to the same storage.

Or were they??

Look at the log again.

ESX4-1 - Failed to open swap file '/volumes/**c861a58d-45816333**/win2k3/win2k3-4aed76bf.vswp'

ESX4-2 - Failed to open swap file '/volumes/**c31eba3f-9dca625f**/win2k3/win2k3-4aed76bf.vswp'See the difference? But how could that be? I remembered that I had run into this issue once before. Let me explain what was happening here. During vMotion the memory state of the VM is transferred from one ESX to the other. In the vmx file of the VM there is a configuration setting as to where the swap is located

sched.swap.derivedName = "/vmfs/volumes/c31eba3f-9dca625f/win2k3/win2k3-4aed76bf.vswp"

When the receiving host is ready to finalize the transfer, it has to take this file to read the swap memory of the VM. This is the only hard-coded path in a VM configuration file, and since the hosts were not seeing the same path, the machine would not migrate.

How did this happen?

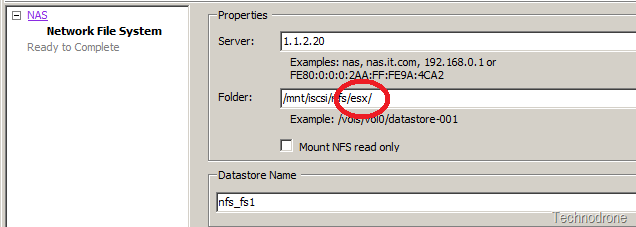

When creating the datastores I did one from the GUI,

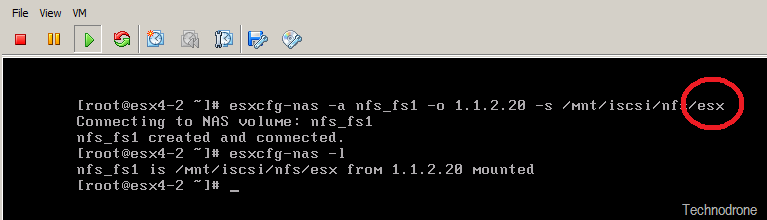

and one from the command line.

Subtle difference of a / but that is what made all the difference.

I removed the volume from one ESX server, created it again and now the output from both hosts

[root@esx4-1 ~]# ls -la /vmfs/volumes/

total 1028

drwxr-xr-x 1 root root 512 Jul 1 16:27 .

drwxrwxrwt 1 root root 512 Jun 30 14:16 ..

drwxr-xr-t 1 root root 1120 Jun 21 12:16 4a3dfa4c-17137398-672b-000c299e8aed

drwxrwsrwx 1 96 96 4096 Jul 1 00:16 c31eba3f-9dca625f

lrwxr-xr-x 1 root root 35 Jul 1 16:27 Local-ESX4-1 -> 4a3dfa4c-17137398-672b-000c299e8aed

lrwxr-xr-x 1 root root 17 Jul 1 16:27 nfs_fs1 -> **c31eba3f-9dca625f**

[root@esx4-2 win2k3]# ls -la /vmfs/volumes/

total 1028

drwxr-xr-x 1 root root 512 Jul 1 16:27 .

drwxrwxrwt 1 root root 512 Jun 30 00:07 ..

drwxr-xr-t 1 root root 1120 Jun 21 14:05 4a3e1408-450c30b1-ab94-000c293f26d7

drwxrwsrwx 1 96 96 4096 Jul 1 00:16 c31eba3f-9dca625f

lrwxr-xr-x 1 root root 35 Jul 1 16:27 Local-ESX4-2 -> 4a3e1408-450c30b1-ab94-000c293f26d7

lrwxr-xr-x 1 root root 17 Jul 1 16:27 nfs_fs1 -> **c31eba3f-9dca625f**2 lessons I learned from this.

- Sometimes you cannot find an answer for everything on the internet – especially if you are using a new product (ESX4) and no-one has had these problems before

- Automate! Automate! Automate! When doing things with scripts, then you are less prone to errors like the one I made above.

Hope you enjoyed the ride!