How much RAM for an ESX server - a.k.a. Lego Blocks

I started to read the sample chapters that Scott Lowe released from his upcoming book, and one of the parts was about the subject of scaling up vs. scaling out.

To explain what I mean by this: should I buy bigger, more monstrous servers, or a greater number of smaller servers?

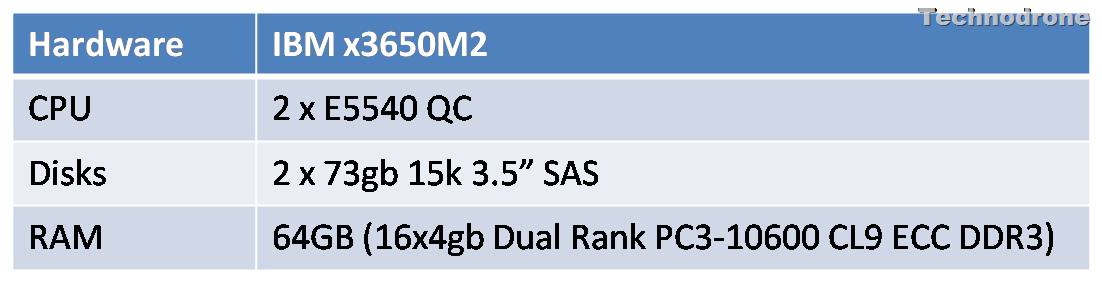

Let us take a sample case study of an environment that has been sized as follows:

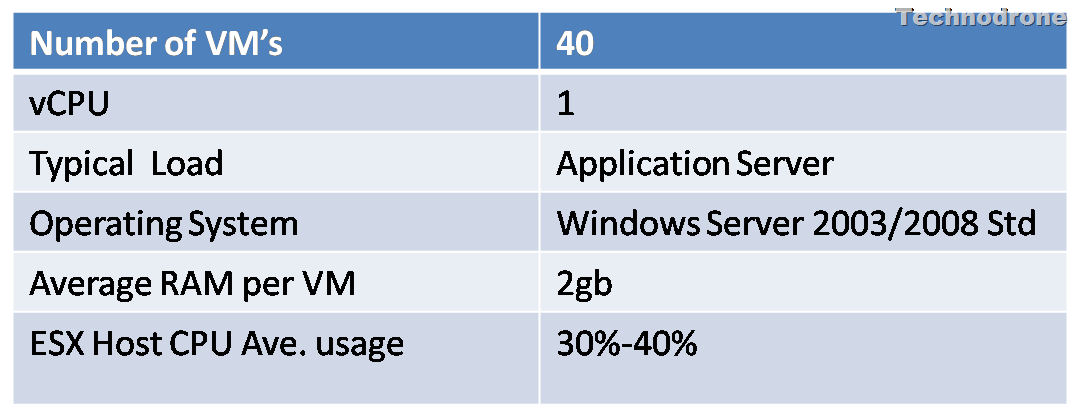

On this hardware an organization has sized their server’s capacity as:

The estimate of 40 virtual machines per host is pretty conservative, but for argument’s sake let’s say that is the requirement that came from the client. The projected number of VMs is up to 200.

Which hardware should be used to host these virtual machines? I am not talking about whether it should be a blade or a rack mount, nor about which vendor (IBM, HP, Dell or other). I am talking about what should go into the hardware for each server, and in particular for this post, what would be the best amount of RAM per server.
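As a quick sanity check on those two figures, here is a minimal sketch of the host count they imply. The function name `hosts_needed` is made up for illustration, and the calculation ignores any spare capacity you would keep for failover.

```python
import math


def hosts_needed(total_vms: int, vms_per_host: int) -> int:
    """Round up, because a partially filled host is still a whole host."""
    return math.ceil(total_vms / vms_per_host)


# Figures from the case study: up to 200 VMs at 40 VMs per host.
print(hosts_needed(200, 40))
```

Change the second argument to see how a different VM density per host changes the number of servers you need to buy.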

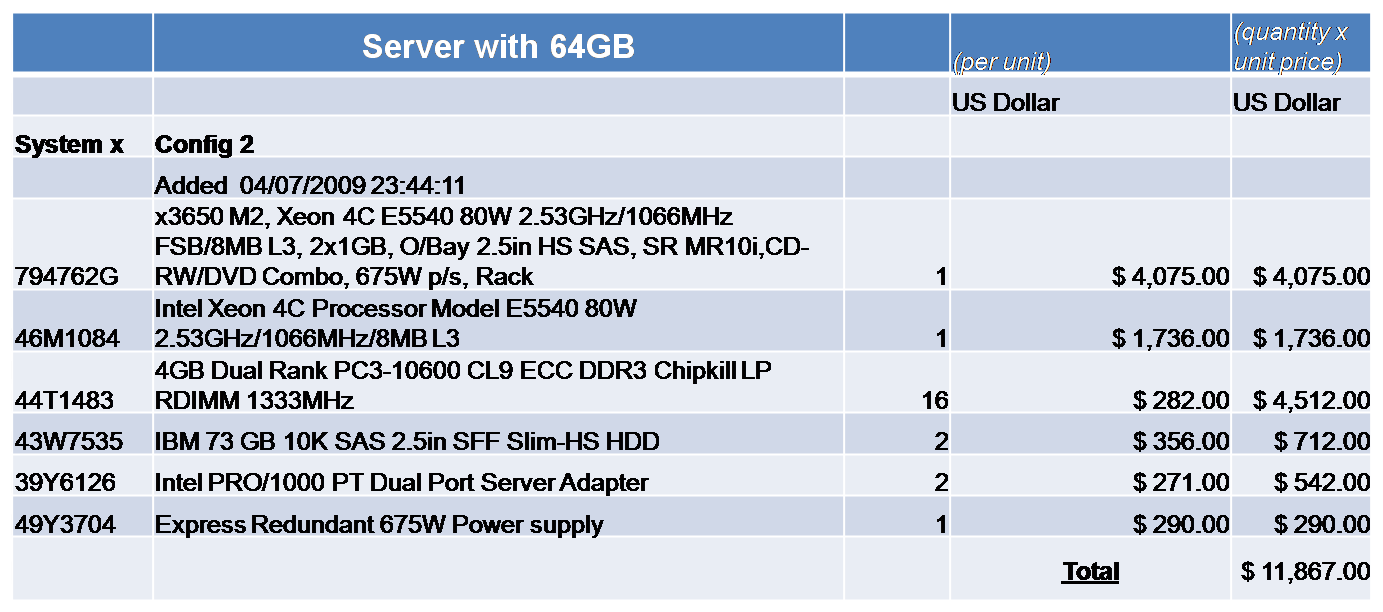

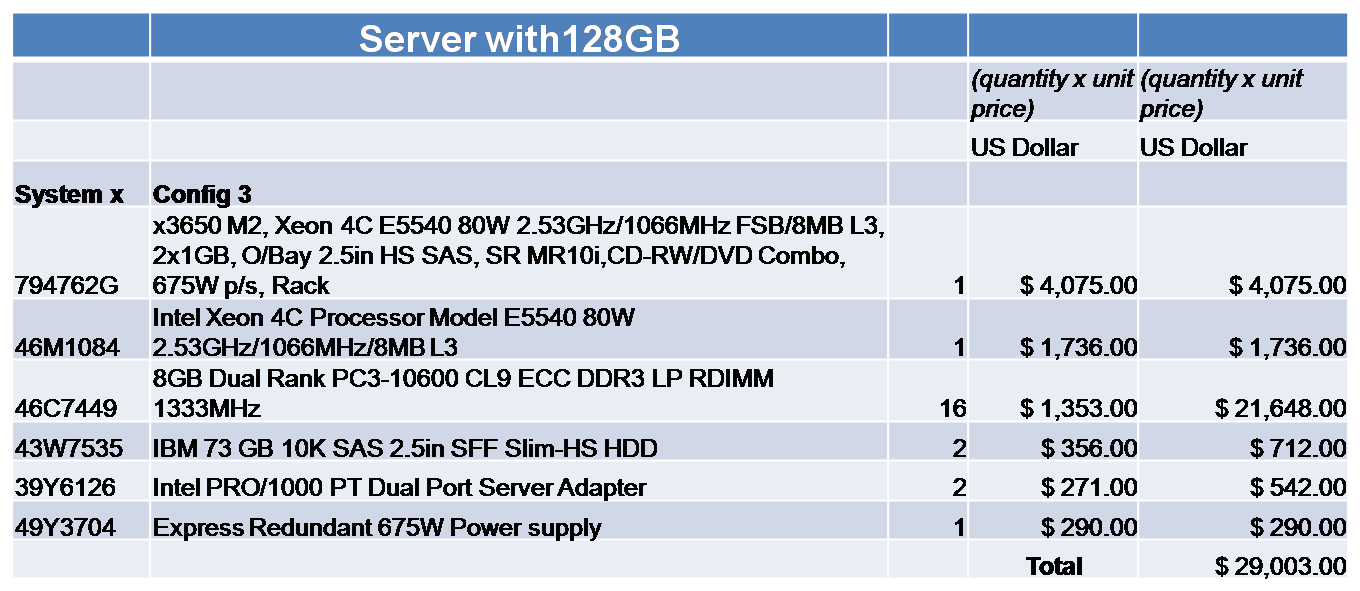

From my experience with the environment that I currently manage, the bottleneck we hit first is always RAM. Our servers run at 60%-70% RAM utilization, but only 30%-40% CPU utilization per server. And from what I have been hearing from the virtualization community, the feeling is generally the same.

I wanted to compare what would be the optimal configuration for a server. Each server was a 2U IBM x3650 with two 72GB hard disks (for the ESX OS), two power supplies, and two Intel PRO/1000T dual NIC adapters. Shared storage is the same for both servers, so that is not something that I take into the equation here. The only difference between them was the amount of RAM in the servers.

All the prices and part numbers are up to date from IBM, and were produced with a tool called the IBM Standalone Solutions Configuration Tool (SSCT). The tool is updated once or twice a month and is extremely useful for configuring and pricing my servers.

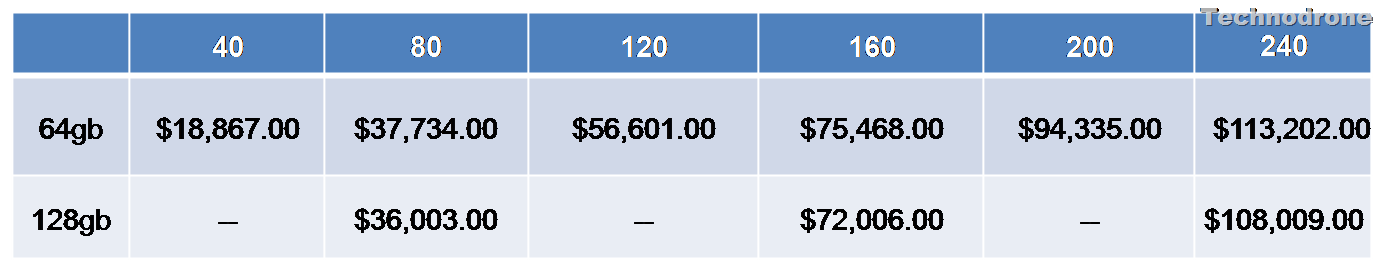

Now the first thing that hit me was the sheer difference in server price. I added 100% more RAM to the server, but the price of the server went up by almost 300%. That is because the 8GB memory modules are so expensive. Now I took building blocks of 40 VMs. To each block I added an ESX Enterprise Plus license, an additional cost of $7,000. I assumed that vCenter was already in place, so this was not a factor in my calculations. The table below compares the two servers in blocks of 40 VMs.
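The building-block arithmetic can be sketched roughly as follows. The function `block_cost` and both server prices are placeholders invented for illustration, not the SSCT quotes; only the $7,000 license figure and the 40-VM block size come from the post. The sketch takes the wording above literally and adds the license to every block, so adjust that if you count licenses per host instead.

```python
LICENSE_PER_BLOCK = 7_000  # ESX Enterprise Plus figure quoted in the post
VMS_PER_BLOCK = 40         # building-block size used in the post


def block_cost(server_price: float, blocks_per_host: int,
               license_per_block: float = LICENSE_PER_BLOCK) -> float:
    """Cost of one 40-VM block: its share of the server plus one license."""
    return server_price / blocks_per_host + license_per_block


# Placeholder prices only; substitute your own quotes.
small_host = block_cost(server_price=10_000, blocks_per_host=1)
large_host = block_cost(server_price=20_000, blocks_per_host=2)

print(f"Per block: {small_host:,.0f} vs {large_host:,.0f}")
print(f"Per VM:    {small_host / VMS_PER_BLOCK:,.2f} vs "
      f"{large_host / VMS_PER_BLOCK:,.2f}")
```

Plugging real quotes into the two calls gives a cost per block and per VM that can be compared directly between a smaller and a larger host.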

Now you can always claim that a server with 80 VMs uses a lot more CPU than a server with only 40. But if you were paying attention at the beginning of the post, the load on a server with 40 VMs was going to be 30%-40%, and therefore doubling it would bring the load up to 60%-80%, which is well within acceptable limits. As you can see from the table above, the 128GB server came out cheaper than the 64GB server on every level that could be compared. Now of course I am not mentioning the savings from the reduction in physical hardware, rack space, electricity and cooling. We all know the benefits.
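The CPU estimate above is a straight linear projection, which can be written out as a small sketch. The helper name `projected_cpu` is hypothetical, and the assumption that CPU load scales linearly with VM count is a simplification that real workloads may not follow.

```python
def projected_cpu(low_pct: float, high_pct: float,
                  current_vms: int, target_vms: int) -> tuple[float, float]:
    """Scale a CPU utilization range linearly with the number of VMs."""
    factor = target_vms / current_vms
    return low_pct * factor, high_pct * factor


# Figures from the post: 30%-40% CPU at 40 VMs, projected to 80 VMs.
low, high = projected_cpu(30, 40, current_vms=40, target_vms=80)
print(f"Projected CPU utilization: {low:.0f}%-{high:.0f}%")
```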

So what did I learn from this exercise?

- Even though the price might seem scary - and a lot to pay for one server - in some cases it does pay off, if you do your calculations and planning.

- 8GB RAM modules are expensive!

- Every situation is different - so you have to do your planning per your requirements.

- I liked the idea of building blocks.

- You have other considerations, such as HA, that will determine how many VMs you can cram onto one host - see Duncan’s post on this subject.

Now of course you can apply this kind of calculation to any kind of configuration, be it a difference in RAM, HBAs, NICs, etc.

Your thoughts and comments are always welcome.