Setting a timeout for an Amazon ECS task

Sometimes you want your application to run, but only for a certain amount of time. There are a number of reasons you might want to do this. It could be that you do not want to spend too much time or money completing an operation (letting things run until eternity would, firstly, take a hell of a long time, but it could also rack up a good amount of spend in your cloud account). You could also have underlying services and dependencies that might time out while waiting for your task to complete, so you set an upper limit on how long your task can run.

When using AWS Lambda, there is a built-in maximum duration for your function: 15 minutes. This is a limitation, but in some ways also a blessing. It forces you, the application owner or architect, to work within a finite run time and design your architecture accordingly. In many customer conversations over the years, I have said, “If you need to run something for longer than 30 minutes, then run it in a container; the easiest option would be ECS.” The other side of this is that ECS has no built-in way to limit how long a task can run. Customers have been asking for this for a while, and recently a colleague of mine, Massimo Re Ferrè, posted a solution that lets you run your task for a finite amount of time. Here is the blog post: Configuring a timeout for Amazon ECS tasks

It is actually quite a clever solution that uses Amazon EventBridge and AWS Step Functions. Essentially, you put a specific tag on your task with the timeout duration, and the Step Functions state machine stops the task after that period.

Today, I would like to propose a different solution to the problem. I do want to state that this is a workaround, and when this becomes available as a full feature in ECS, you should use that. But until then…

First the Why??

Even though the approach above solves the problem well, it does require you to understand two additional AWS services, Amazon EventBridge and AWS Step Functions. There is a certain amount of overhead to this: learning a new service and its APIs, understanding Amazon States Language, and getting to know each service’s cost structure. For some customers this could be too much, and they prefer keeping it all in-house within ECS.

Next, the How..

ECS has the concept of an “essential container”. You mark a container as essential with the essential parameter in its container definition, which is part of your task definition.

Here is the documentation where you can find more details.

essential

Type: Boolean

Required: no

If the essential parameter of a container is marked as true, and that container fails or stops for any reason, all other containers that are part of the task are stopped. If the essential parameter of a container is marked as false, then its failure doesn’t affect the rest of the containers in a task. If this parameter is omitted, a container is assumed to be essential.

All tasks must have at least one essential container. If you have an application that’s composed of multiple containers, group containers that are used for a common purpose into components, and separate the different components into multiple task definitions. For more information, see Application architecture.

A task definition can contain more than one container, and more than one container can be marked as essential. The first container in the task definition is marked as essential by default.

How is this going to solve the problem? Adding another essential container to your task definition, one that exits after a defined period of time, gives you an option to set a timeout for the task: when that container exits, ECS stops the rest of the task.
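To make the idea concrete, here is a minimal sketch of adding such a container to an existing task definition, represented as a plain Python dictionary. The helper name add_timeout_container is hypothetical and not part of any AWS SDK; the container name, busybox image, and TIMEOUT variable match the example used later in this post. It only edits the JSON document; registering the new revision with ECS is not shown.

```python
import copy
import json


def add_timeout_container(task_definition, timeout_seconds,
                          image="public.ecr.aws/docker/library/busybox:latest"):
    """Return a copy of task_definition with an essential 'sleep' container appended.

    Hypothetical helper for illustration only; it does not call any AWS API.
    """
    if timeout_seconds <= 0:
        raise ValueError("timeout_seconds must be positive")

    updated = copy.deepcopy(task_definition)  # leave the caller's dict untouched
    sleep_container = {
        "name": "sleep",
        "image": image,
        # Essential: when this container exits, the whole task is stopped.
        "essential": True,
        "entryPoint": ["sh", "-c"],
        # The shell expands $TIMEOUT from the container environment.
        "command": ["sleep $TIMEOUT"],
        "environment": [{"name": "TIMEOUT", "value": str(timeout_seconds)}],
    }
    updated.setdefault("containerDefinitions", []).append(sleep_container)
    return updated


if __name__ == "__main__":
    original = {
        "family": "sleep-test",
        "containerDefinitions": [
            {"name": "hello-world", "image": "nginxdemos/hello", "essential": True}
        ],
    }
    print(json.dumps(add_timeout_container(original, 60), indent=2))
```

The function returns a modified copy rather than mutating its input, so the original definition can still be compared against the new one before you register a revision.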

I would like to point out a few caveats.

- This solution does have additional overhead. It is minimal, but it is still there.

- This requires a change to your task definition (a new revision). Some people prefer to manage this from outside of ECS; if that is the case for you, have a look at Massimo’s solution above.

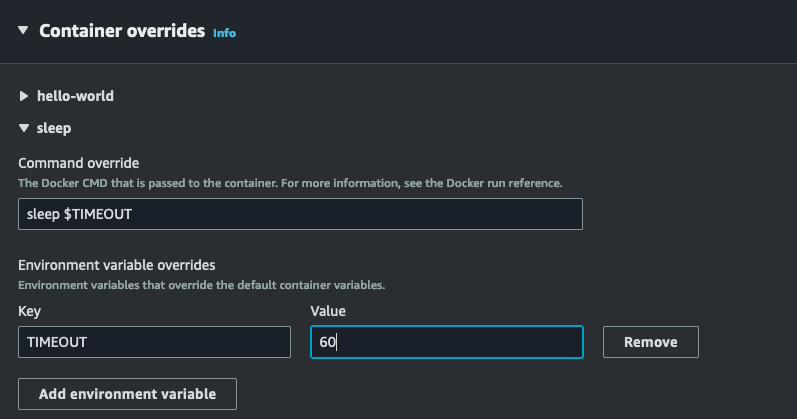

- This requires you pass an environment variable to the

sleepcontainer (in my case I named itTIMEOUT), which means you need to either configure the value in the task definition (which you will need to do when you launch a service), or when you specify the variable and value when launching a standalone task.

The What..

The easiest way to accomplish this is with a sleep statement. You run a command inside your container with the sleep command and a duration.

To make this as generic as possible and to rely on existing, publicly available images, I chose to do this with the busybox image. The image is under 5 MB in size, so it is really small and lightweight.

    ~ ❯ docker images | grep busy
    public.ecr.aws/docker/library/busybox   1.36.0   af2c3e96bcf1   2 days ago   4.86MB
    public.ecr.aws/docker/library/busybox   latest   af2c3e96bcf1   2 days ago   4.86MB

To give you the most flexibility, it would be ideal to pass the value of your timeout to the container when launching it.

Let’s have a look at how this would work in practice with an example task definition:

    {
      "taskDefinitionArn": "arn:aws:ecs:us-west-2:218616270196:task-definition/sleep-test:2",
      "containerDefinitions": [
        {
          "name": "hello-world",
          "image": "nginxdemos/hello",
          "cpu": 0,
          "portMappings": [
            {
              "name": "hello-world-80-tcp",
              "containerPort": 80,
              "hostPort": 80,
              "protocol": "tcp",
              "appProtocol": "http"
            }
          ],
          "essential": true,
          "environment": [],
          "environmentFiles": [],
          "mountPoints": [],
          "volumesFrom": [],
          "ulimits": [],
          "logConfiguration": {
            "logDriver": "awslogs",
            "options": {
              "awslogs-group": "/ecs/sleep-test",
              "awslogs-create-group": "true",
              "awslogs-region": "us-west-2",
              "awslogs-stream-prefix": "ecs"
            }
          }
        },
        {
          "name": "sleep",
          "image": "public.ecr.aws/docker/library/busybox:latest",
          "cpu": 0,
          "portMappings": [],
          "essential": true,
          "entryPoint": [
            "sh",
            "-c"
          ],
          "command": [
            "sleep $TIMEOUT"
          ],
          "environment": [
            {
              "name": "TIMEOUT",
              "value": "60"
            }
          ],
          "environmentFiles": [],
          "mountPoints": [],
          "volumesFrom": [],
          "logConfiguration": {
            "logDriver": "awslogs",
            "options": {
              "awslogs-group": "/ecs/sleep-test",
              "awslogs-create-group": "true",
              "awslogs-region": "us-west-2",
              "awslogs-stream-prefix": "ecs"
            }
          }
        }
      ],
      "family": "sleep-test",
      "executionRoleArn": "arn:aws:iam::218616270196:role/ecsTaskExecutionRole",
      "networkMode": "awsvpc",
      "revision": 2,
      "volumes": [],
      "status": "ACTIVE",
      "requiresAttributes": [
        {
          "name": "com.amazonaws.ecs.capability.logging-driver.awslogs"
        },
        {
          "name": "ecs.capability.execution-role-awslogs"
        },
        {
          "name": "com.amazonaws.ecs.capability.docker-remote-api.1.19"
        },
        {
          "name": "com.amazonaws.ecs.capability.docker-remote-api.1.18"
        },
        {
          "name": "ecs.capability.task-eni"
        },
        {
          "name": "com.amazonaws.ecs.capability.docker-remote-api.1.29"
        }
      ],
      "placementConstraints": [],
      "compatibilities": [
        "EC2",
        "FARGATE"
      ],
      "requiresCompatibilities": [
        "EC2",
        "FARGATE"
      ],
      "cpu": "1024",
      "memory": "3072",
      "runtimePlatform": {
        "cpuArchitecture": "X86_64",
        "operatingSystemFamily": "LINUX"
      },
      "tags": []
    }

I have two containerDefinitions in my task definition. The first one is my NGINX container, which prints “Hello World”; it exposes port 80 and is marked as essential. The second is my sleep container. It uses the busybox image from Amazon ECR Public and is also marked as essential. It does not expose any ports, but it does have an entryPoint and command configured. The only thing it does is run the sleep command with a value. You will notice that the sleep command contains a $TIMEOUT variable. The task definition above sets it to 60 (seconds), and you can override that value at run time by passing the environment variable to the RunTask API. You can do this through the CLI or in the console.
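As a rough sketch of that run-time override, the snippet below builds the overrides document that RunTask accepts, setting TIMEOUT for the sleep container only. The function name build_timeout_overrides is hypothetical; the containerOverrides structure (name plus an environment list of name/value pairs) follows the RunTask request shape. Cluster, launch type, and network configuration are left out and would still be needed for a real call.

```python
import json


def build_timeout_overrides(timeout_seconds, container_name="sleep", variable="TIMEOUT"):
    """Build a RunTask 'overrides' document that sets the timeout variable.

    Hypothetical helper for illustration; it only constructs the payload
    and does not call ECS.
    """
    if timeout_seconds <= 0:
        raise ValueError("timeout_seconds must be positive")

    return {
        "containerOverrides": [
            {
                # Must match the container name in the task definition.
                "name": container_name,
                # Environment values are always strings in ECS.
                "environment": [{"name": variable, "value": str(timeout_seconds)}],
            }
        ]
    }


if __name__ == "__main__":
    # Stop the task after five minutes instead of the default from the task definition.
    print(json.dumps(build_timeout_overrides(300)))
```

The printed JSON can be passed as the value of the --overrides option of aws ecs run-task, or the dictionary can be passed as the overrides argument to the run_task call of an SDK such as boto3. Only the sleep container is overridden, so the application container runs exactly as defined.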

Here is an example

Here is what this looks like in action

Please leave your feedback on the GitHub issue if this is useful or you have any thoughts.